From Punch Cards to the Cloud

By Yovko Lambrev 2020-02-19

From the era of punch cards to the present day, the IT world has been moving toward a model in which ever more small, autonomous components are assembled into a working system.

In less than a century, machines that performed only one task at a time, with programs waiting in line to be executed in batches, have become multiprocessing, multitasking, and multithreaded. With the help of additional tools such as containers and virtualization, what were once big monolithic applications can now exist as cloud systems made of autonomous building blocks and microservices. Their components can be built with different technologies, distributed geographically across continents, and scaled up or down automatically according to the load they have to handle. This flexibility was not achievable until recently, and today this complexity can also be hidden at various levels so that people with very different skills can use it. Business departments can now do much more on their own, without waiting for the IT team to help them.

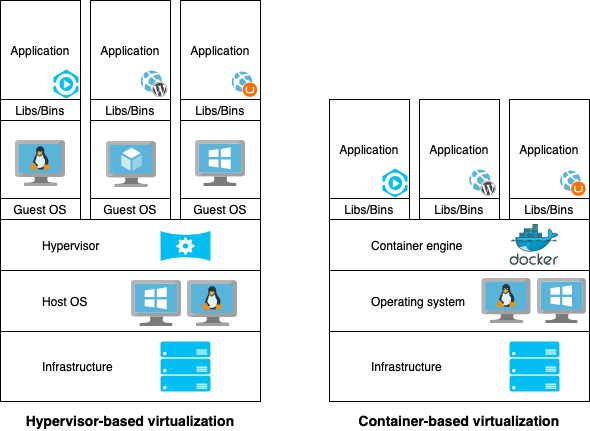

Modern data centers are equipped with powerful multiprocessor servers, and a software or firmware virtualization layer called a hypervisor distributes their computing resources among so-called virtual servers, each of which behaves like a completely separate system. Virtual servers can be assigned to different clients and can perform various tasks independently and in isolation from each other, even though they are housed in the same physical machine.

Moreover, virtualization allows a group of physically separate servers to pool their resources and capacity into virtual machines more powerful than any one of them individually.

Virtualization has a cost: the hypervisor consumes some of the resources itself, and each virtual server, running its own copy of the operating system (OS) and application stack, takes up disk space for it. This can be needlessly wasteful, especially when many virtual servers are similar or identical. A better scenario is when the hypervisor is implemented in firmware running on its own dedicated silicon, much like bare-metal machines that run a software hypervisor directly on the hardware.

Despite this overhead, for single, monolithic software systems virtualization is often a convenient, easy, and preferred approach to deployment and to subsequent operation and maintenance.

However, this convenience disappears when the number of managed applications grows, or when they consist of many polyglot components built as microservices. In that case, containers are a lifesaver.

In practice, containerization is a type of OS-level virtualization that keeps applications or components isolated from one another without requiring a separate virtual machine for each of them. Containers share the kernel of the host operating system and package the libraries and other components they need to run. In this way, containers encapsulate microservices or applications that can be quickly and easily launched, stopped, moved from one system (or cloud) to another, or even replicated.

All of this can be automated to provide greater autonomy and flexibility as operating conditions change. Containers also make it easier to roll out changes, fixes, and new versions, and it is straightforward to reproduce an identical copy of a given system for testing or development.

Additionally, container management (orchestration) tools running on clusters of physical or virtual servers provide even more flexibility in deploying and operating complex multi-component systems.

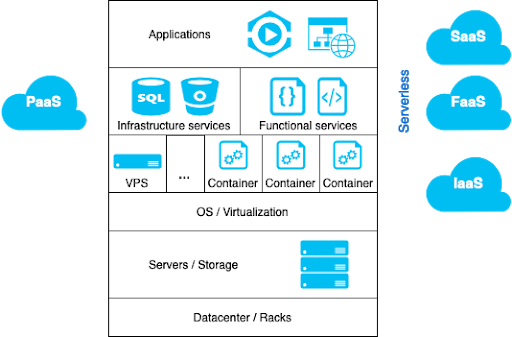

If we look inside a modern data center, we see the usual racks of servers and storage, but the software infrastructure running on them looks like this:

The hardware layer (servers and storage) is often managed by a Linux operating system with a virtualization hypervisor installed on top of it. The hypervisor allocates the computing resources among virtual servers used for various purposes. Hosting companies and cloud vendors that provide infrastructure (IaaS, Infrastructure as a Service) usually work at this level.

This level requires qualified system administrators who can maintain all the necessary resources at large scale, including their security. Automation helps, but it cannot replace administrators entirely.

As customers or developers, if we need a database, for example, we have to install and configure it on a rented virtual or physical server that meets our needs. We also have to plan data backups, maintenance, and update procedures, and, of course, be competent in all of this. And we need an action plan for when more performance or capacity is required.

Instead, we can rent the same database from a cloud provider that takes care of most of these tasks, leaving us free to focus on using it effectively. We don't even need to know where it is installed, and we don't have to worry about updating the underlying operating system. All of this is included in the usage fee, most often measured by CPU utilization and/or storage capacity. This kind of offering is often called Platform as a Service (PaaS).

This approach can be very convenient: it allows smooth migration from one location to another, makes it easy to set up replication, automated backups, and archives, and, of course, provides scalability when needed. Without such a service, all of this would require expensive expertise and considerable time for planning and implementation, which can be hard or even impossible for small businesses and startups.

This concept is often called serverless: the servers are still there, but they remain invisible to users.

If you are a development department (or a software company) and want to impress your boss or customer with speed, flexibility, and low cost, you can take advantage of yet another level of abstraction. You can design your solution as a system of microservices and components, including third-party ones. Each can be written in a different programming language, can have its own relationships and communication interfaces with the others, and can be executed asynchronously. Instead of packing all the features into one core application, each function can be coded and deployed independently on the cloud, or even on different clouds, again without worrying about the infrastructure under your code. Such cloud offerings are called Function as a Service (FaaS). You (or your customer) pay a usage fee, most often determined by the number of times the functions are executed and/or the CPU time and memory they use.
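
To make the pay-per-use model concrete, here is a minimal Python sketch that estimates a FaaS bill from the two quantities mentioned above: how many times a function runs, and how much compute time and memory it consumes. It assumes a pricing scheme with a per-request charge plus a charge per GB-second (memory allocated multiplied by execution time). The names `FunctionUsage` and `estimate_faas_cost` and all prices are hypothetical placeholders, not any provider's actual rates; real price lists may also include free tiers or round durations up.

```python
from dataclasses import dataclass


@dataclass
class FunctionUsage:
    """Usage of one function over a billing period (hypothetical model)."""
    invocations: int        # number of times the function was executed
    avg_duration_s: float   # average execution time per invocation, in seconds
    memory_gb: float        # memory allocated to each running copy, in GB


def estimate_faas_cost(usage: FunctionUsage,
                       price_per_million_requests: float,
                       price_per_gb_second: float) -> float:
    """Return request charge + compute charge for the given usage."""
    # Charge for the number of executions.
    request_cost = usage.invocations / 1_000_000 * price_per_million_requests
    # Compute is billed in GB-seconds: memory allocated x time spent running.
    gb_seconds = usage.invocations * usage.avg_duration_s * usage.memory_gb
    compute_cost = gb_seconds * price_per_gb_second
    return request_cost + compute_cost


if __name__ == "__main__":
    # Placeholder prices, for illustration only.
    usage = FunctionUsage(invocations=2_000_000, avg_duration_s=0.5, memory_gb=0.5)
    cost = estimate_faas_cost(usage,
                              price_per_million_requests=0.20,
                              price_per_gb_second=0.00001)
    print(f"estimated cost: {cost:.2f}")
```

For example, 2,000,000 invocations averaging 0.5 s with 0.5 GB of memory add up to 500,000 GB-seconds; with the placeholder prices above, the estimate comes to about 5.40 in whatever currency the prices are quoted in. What matters is the shape of the calculation: under this model the bill grows with actual usage, and a function that is never called costs nothing.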

This approach is extremely convenient when you need to complete tasks very quickly, because the cloud can run hundreds of copies of a given function simultaneously to process many concurrent requests. The provider takes care of allocating the resources and configuring the required infrastructure underneath, so you can concentrate on your code.
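
As a rough local analogy for this fan-out, the Python sketch below runs many copies of a small, stateless handler concurrently using a thread pool from the standard library. A real FaaS platform starts separate instances (for example, containers or lightweight virtual machines) rather than threads inside one process, so this only illustrates the idea; `handle_request` and `fan_out` are hypothetical names. The handler keeps no state between calls, which is what allows any number of copies to run side by side.

```python
from concurrent.futures import ThreadPoolExecutor


def handle_request(request_id: int) -> str:
    # Hypothetical stateless handler: the result depends only on the input,
    # so many copies can safely run at the same time.
    return f"processed-{request_id}"


def fan_out(request_ids: list[int], max_copies: int) -> list[str]:
    # Run up to max_copies handler calls at once; map() returns results
    # in the same order as the inputs, even though the calls run concurrently.
    with ThreadPoolExecutor(max_workers=max_copies) as pool:
        return list(pool.map(handle_request, request_ids))


if __name__ == "__main__":
    results = fan_out(list(range(300)), max_copies=100)
    print(len(results), results[0], results[-1])
```

Running it prints `300 processed-0 processed-299`. In the cloud, the `max_copies` limit corresponds to the concurrency the provider is willing to grant, and scaling decisions are made by the platform rather than by your code.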

And of course, at the top of this stack sits SaaS (Software as a Service). It can be built on either monolithic or serverless architectures. So far, SaaS looks like the most suitable business model for the cloud: it replaces on-premises software licensing with a subscription fee, most often calculated per user or based on the resources used.